Out-of-sample Embedding

Contents

6.5. Out-of-sample Embedding#

Imagine you have a citation network of scholars publishing papers. The nodes are the scholars, and an edge exists in a given pair of scholars if they’re published a paper together.

You’ve already found a representation using ASE or LSE and you have a set of latent positions, which you then clustered to figure out who came from which university. It took a lot of computation time for you to get this representation - there are a lot of people doing research out there!

Now, suppose a new graduate student publishes a paper. Your network gets bigger by a single node, and you’d like to find this person’s latent position (thus adding them to the clustering system). To do that naively, however, you’d have to get an entirely new representation for the network: your latent position matrix is \(n \times d\), and it would need to become \((n+1) \times d\). Re-embedding the entire network with the new node added seems like it should be unecessary - after all, you already know the latent positions for every other node.

This section is all about this problem: how to find the representation for new nodes without the computationally expensive task of re-embedding an entire network. As it turns out, there has been some work done, and there is a solution that can get you pretty close the latent position for the new node that you would have had. For more details and formality, see [1].

Let’s make a network from an SBM, and an additional node that should belong to the first community. Then, we’ll embed the network and explore how to find the latent position for the additional node.

6.5.1. Data Generation#

import numpy as np

from graspologic.simulations import sbm

from graspologic.utils import remove_vertices

# Generate parameters

B = np.array([[0.8, 0.2],

[0.2, 0.8]])

# Generate both an in-sample network along with community memberships,

# and an out-of-sample node with the same SBM call

network, labels = sbm(n=[101, 100], p=B, return_labels=True)

labels = list(labels)

# Grab out-of-sample node

oos_idx = 0

oos_label = labels.pop(oos_idx)

# in-sample network

A, a_0 = remove_vertices(network, indices=oos_idx, return_removed=True)

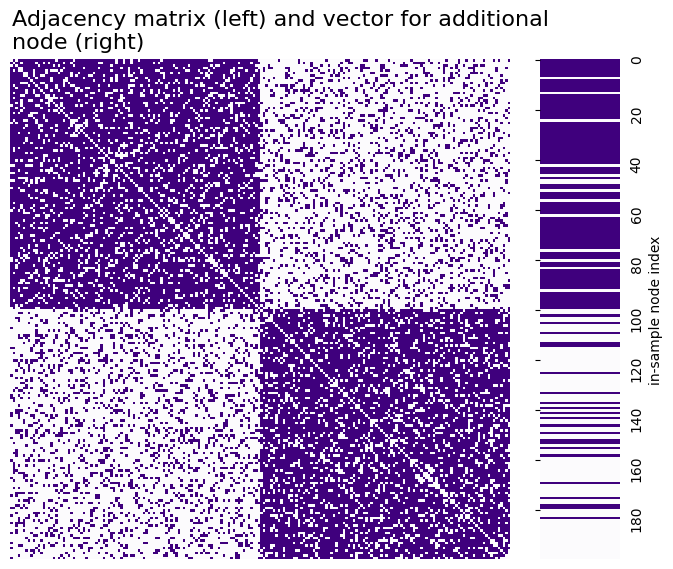

What we have now is a network and an additional node. The network with \(100\) nodes is called the “in-sample” network, because it is the network we are going to embed. The in-sample network is the collection of these 100 nodes and the edges amongst them. You can see the adjacency matrix for the network below, along with the adjacency vector for the additional out-of-sample node. An adjacency vector for an \(n\)-node network for a node \(i\) is a length-\(n\) vector with a 1 in every position that node \(i\) has an edge with another node, and a \(0\) in every position that node \(i\) does not have an edge with another node. The heatmap on the left is a network with two communities, with 100 nodes in each community. The vector on the right is purple on row \(i\) if the \(i^{th}\) in-sample node is connected to the out-of-sample node.

import matplotlib.pyplot as plt

import seaborn as sns

from graphbook_code import heatmap

from graphbook_code.plotting import cmaps

fig = plt.figure(figsize=(8, 5))

ax = fig.add_axes([0, 0, 1, 1])

# heatmap

heatmap(A.astype(float), ax=ax, cbar=False)

# adjacency vector

ax = fig.add_axes([.85, 0, .1, 1])

cmap = cmaps["sequential"]

plot = sns.heatmap(a_0[:, np.newaxis], ax=ax, cbar=False, cmap=cmap, xticklabels=False, yticklabels=20)

plt.tick_params(axis='y', labelsize=10, labelleft=False, labelright=True)

plt.ylabel("in-sample node index")

plot.yaxis.set_label_position("right")

# title

fig.suptitle("Adjacency matrix (left) and vector for additional \nnode (right)", y=1.1, fontsize=16, x=.19, ha="left");

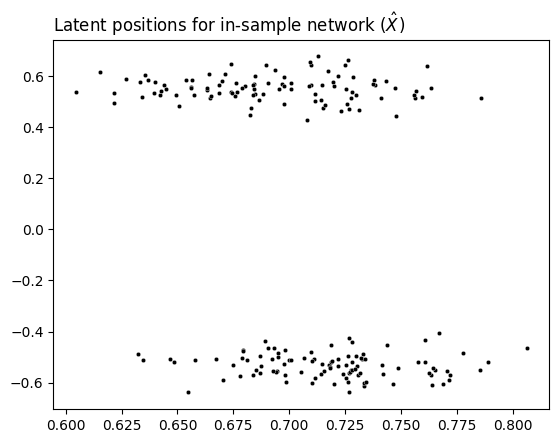

After embedding with ASE, we have an embedding for the in-sample network. The rows of this embedding contain the latent position for each in-sample node. We’ll call the embedding \(X\).

from graspologic.embed import AdjacencySpectralEmbed as ASE

ase = ASE(n_components=2)

ase.fit(A)

X = ase.transform(A)

from graphbook_code import plot_latents

plot_latents(X, title="Latent positions for in-sample network ($\\hat X$)");

6.5.2. Probability Vector Estimation#

Everything up until now has just been pretty standard stuff. We still haven’t done anything with our new node - all we have is a big vector that tells us which other nodes it’s connected to, and our standard matrix of latent positions. However, it’s time for a bit more exploration into the nature of the latent position matrix \(X\), and what happens when you view it as a linear transformation. This will get us closer to understanding the out-of-sample embedding.

Remember from the section on Random Dot Product Graphs that if the network is an RDPG, that the latent position matrix can be used to obtain the probability matrix, as \(P = XX^\top\), and \(p_{ij} = \vec x_i^\top \vec x_j\). When you use ASE on a single network to estimate the latent position matrix with \(\hat X\), \(\hat X\hat X^\top\) estimates \(P\): meaning, \((\hat X\hat X^\top)_{ij}\), the element on the \(i^{(th)}\) row and \(j^{(th)}\) column of \(\hat X\hat X^\top\), will estimate the probability that node \(i\) has an edge with node \(j\).

Let’s consider a single node \(j\) in our network. What is \(Xx_j\)? Written out mathematically, we end up with:

When we multiply a matrix by a vector, we multiply the rows of the matrix times the vector itself, so:

But remember for an RDPG, that \(p_{ij} = x_i^\top x_j\), so:

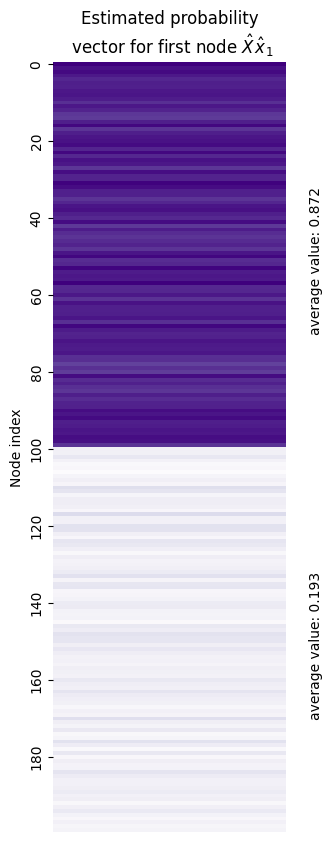

So it turns out that \(Xx_j\) is just a vector, whose elements correspond to the probability that node \(j\) is connected to any of the other nodes in the network! This means that \(p_j = Xx_j\), or that the probability vector for the node \(j\) can be represented as the product of the latent position matrix and the latent position vector for that node \(j\). When we instead use estimates of the latent positions, such as those produced by ASE, we just add hats to everything: \(\hat p_j = \hat X \hat x_j\), where the hats indicate that the quantities are estimates. In reality, when we estimate a probability vector in this way, we should enforce that \(\hat p_j\) has all elements between \(0\) and \(1\), since probabilities cannot exceed \(1\) and cannot be less than \(0\), but this is a finer technical detail.

You can see this in action below. We took the estimated latent position corresponding to the first node out of the latent position matrix (and called it \(\hat x_1\)), and then multiplied it by the estimated latent position matrix itself. What emerged is what you see below: a vector that shows the estimated probability that node 1 has an edge with each other node in the network. The true probabilities for the first half of nodes (the ones in the same community) should be 0.8, and the true probabilities for the second half of nodes in the other community should be 0.2. The average values we computed were 0.775 and 0.149 - so, pretty close!

x_1 = X[0, :]

x_est_proba = X @ x_1

fig, ax = plt.subplots(figsize=(3, 10))

sns.heatmap(x_est_proba[:, np.newaxis], cbar=False, cmap=cmap, xticklabels=False, yticklabels=20, ax=ax)

ax.text(1.1, 70, s=f"average value: {x_est_proba[:100].mean():.3f}", rotation=90)

ax.text(1.1, 170, s=f"average value: {x_est_proba[100:].mean():.3f}", rotation=90)

ax.set_ylabel("Node index")

plt.title("Estimated probability\n vector" + r" for first node $\hat X \hat x_1$");

6.5.3. Inversion of Probability Vector Estimation#

Remember that our goal is to take the adjacency vector for a new node and use it to estimate that node’s latent position without re-embedding the whole network. So far, we’ve essentially figured out how to use the node’s latent position to get an estimated probability vector.

Let’s think about the term “estimated probability vector” for a second. This should be a vector associated to node \(i\) with \(n\) elements, where the \(j^{th}\) element of the vector contains the probability that node \(i\) will connect to node \(j\). The thing we’re starting with for the out-of-sample node, however, is an adjacency vector full of 0’s and 1’s - 0 if there isn’t an edge, 1 if there is an edge.

As it turns out, this adjacency vector is going to behave sort of like the probability vector, with some caveats. On average, when the node the probability vector corresponds to has higher probabilities with other nodes, the adjacency vector will have more \(1\)s. Similarly, when the node the probability corresponds to indicates lower probabilities with other nodes, the adjacency vector will have more \(0\)s. For this reason, we are going to leverage the adjacency vector in place of an estimated probability vector when dealing with the out of sample node, since it is the best we have. This can be rigorously shown to be a reasonable step to take, as demonstrated by Tang et al. in their work.

The point here is that if you can start with a latent position and then estimate the edge probabilities, it’s somewhat equivalent (albeit going in the other direction) to start with an out-of-sample adjacency vector and estimated latent position matrix, and use these to estimate a node’s the latent position.

Let’s call the estimated probability vector \(\hat{p}_i\). We know that \(\hat p_i = \hat{X} \hat x_i\): you multiply the latent position matrix by the \(i_{th}\) latent position to estimate the probability vector (remember that the ^ hats above letters means we’re getting an estimate for something, rather than getting the thing itself). How do we isolate the latent position \(\hat x_i\)?

Well, if \(\hat X\) were invertible, we could do \(\hat{X}^{-1} \hat p_i = \hat x_i\): just multiply by \(\hat X\)’s inverse to get \(\hat x_i\) by itself. Unfortunately, in practice, \(X\) will almost never be invertible. We’ll have to do the next-best thing, which is to use the pseudoinverse.

We’ll take a brief break in the coming section to talk about the pseudoinverse for a bit, then we’ll come back and use it to estimate the out-of-sample latent position.

6.5.4. The Moore-Penrose Pseudoinverse#

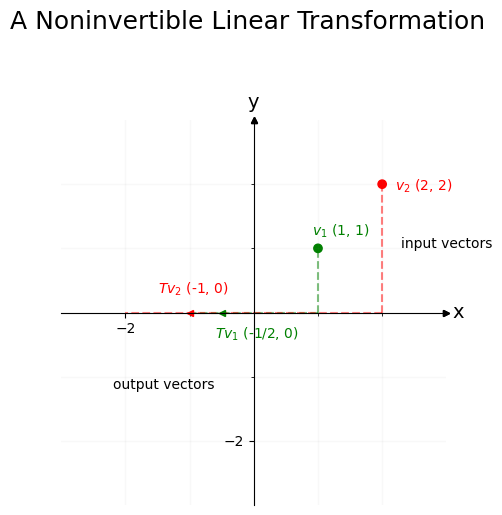

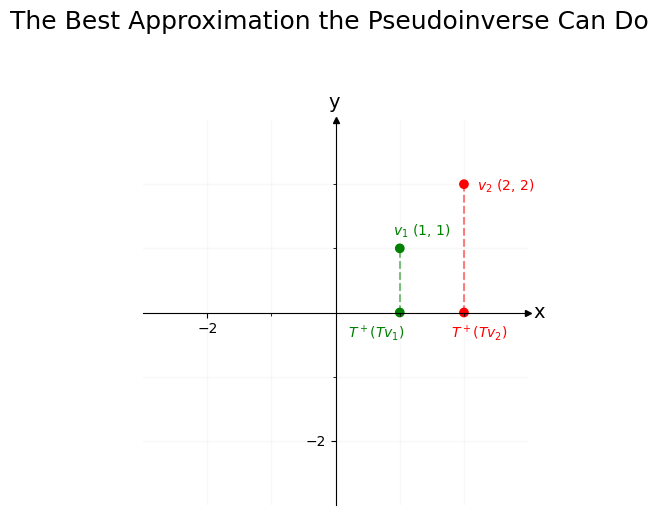

The Moore-Penrose Pseudoinverse is useful to know in general, since it pops up a lot in a lot of different places. Say you have a matrix \(T\) which isn’t invertible.

The pseudoinverse \(T^+\) is the closest approximation you can get to the inverse \(T^{-1}\). This is best understood visually. Let’s take \(T\) to be a matrix which projects points on the x-y coordinate axis down to the x-axis, then flips them to their negative on the number line. The matrix would look like this:

Some information is inherently lost here. Because the second column is all zeroes, any information in the y-axis can’t be recovered. For instance, say we have some vectors with different x-axis and y-axis coordinates:

When we use \(T\) as a linear transformation to act on \(v_1\) and \(v_2\), the y-axis coordinates both collapse to the same thing (0, in this case). Information in the x-axis, however, is preserved.

import numpy as np # v 1.19.2

import matplotlib.pyplot as plt # v 3.3.2

from graphbook_code import draw_cartesian

# make axis

ax = draw_cartesian()

# Enter x and y coordinates of points and colors

xs = [1, 2]

ys = [1, 2]

colors = ['g', 'r']

# Plot points

ax.scatter(xs, ys, c=colors)

# Draw lines connecting points to axes

for x, y, c in zip(xs, ys, colors):

ax.plot([x, x], [0, y], c=c, ls='--', lw=1.5, alpha=0.5)

ax.plot([x, -x], [0, 0], c=c, ls='--', lw=1.5, alpha=0.5)

# Draw arrows

arrow_fmt = dict(markersize=4, color='black', clip_on=False)

ax.plot((1), (0), marker='>', transform=ax.get_yaxis_transform(), **arrow_fmt)

ax.plot((0), (1), marker='^', transform=ax.get_xaxis_transform(), **arrow_fmt)

arrow_fmt = dict(markersize=4,clip_on=False)

ax.plot((-1/2), (0), marker='<', color="green", **arrow_fmt)

ax.plot((-1), (0), marker='<', color="red", **arrow_fmt)

# Draw text

ax.text(x=.9, y=1.2, s="$v_1$ (1, 1)", fontdict=dict(c="green"))

ax.text(x=2.2, y=1.9, s="$v_2$ (2, 2)", fontdict=dict(c="red"))

ax.text(x=-.6, y=-.4, s="$T v_1$ (-1/2, 0)", fontdict=dict(c="green"))

ax.text(x=-1.5, y=.3, s="$T v_2$ (-1, 0)", fontdict=dict(c="red"));

# input/output

ax.text(x=2.3, y=1, s="input vectors", fontdict=dict(c="black"))

ax.text(x=-2.2, y=-1.2, s="output vectors", fontdict=dict(c="black"));

plt.suptitle("A Noninvertible Linear Transformation", fontsize=18, y=1.1);

Our goal is to reverse \(T\) and bring \(Tv_1\) and \(Tv_2\) back to \(v_1\) and \(v_2\). Unfortunately, since both \(v_1\) and \(v_2\) get squished onto zero in the y-axis position after getting projected by \(T\), we’ve lost all information about what was happening on the y-axis – that’s a lost cause. So it’s impossible to get perfectly back to \(v_1\) or \(v_2\).

If you restrict your attention to the x-axis, however, you’ll see that \(Tv_1\) and \(Tv_2\) landed in different places (\(v_1\) went to -1, and \(v_2\) went to -2). You can use this information about the x-axis location of \(Tv_1\) and \(Tv_2\) to re-orient the x-axis values back to where they were prior to the vectors getting projected by \(T\), even if it’s impossible to figure out where the y-values were.

That’s what the pseudoinverse does: it reverses what it can, and accepts that some information has vanished. In this case, the pseudoinverse of \(T\) is:

import numpy as np # v 1.19.2

import matplotlib.pyplot as plt # v 3.3.2

# make axis

ax = draw_cartesian()

# Enter x and y coordinates of points and colors

xs = [1, 2]

ys = [1, 2]

xs_out = [1, 2]

ys_out = [0, 0]

colors = ['g', 'r']

# Plot points

ax.scatter(xs, ys, c=colors)

ax.scatter(xs_out, ys_out, c=colors)

# Draw lines connecting points to axes

for x, y, c in zip(xs, ys, colors):

ax.plot([x, x], [0, y], c=c, ls='--', lw=1.5, alpha=0.5)

arrow_fmt = dict(markersize=4, color='black', clip_on=False)

# Draw text

ax.text(x=.9, y=1.2, s="$v_1$ (1, 1)", fontdict=dict(c="green"))

ax.text(x=2.2, y=1.9, s="$v_2$ (2, 2)", fontdict=dict(c="red"))

ax.text(x=.2, y=-.4, s="$T^+ (T v_1$)", fontdict=dict(c="green"))

ax.text(x=1.8, y=-.4, s="$T^+ (T v_2$)", fontdict=dict(c="red"));

plt.suptitle("The Best Approximation the Pseudoinverse Can Do", fontsize=18, y=1.1);

6.5.5. Using the Pseudoinverse for Out-of-Sample Estimation#

Let’s get back to estimating our out-of-sample latent position.

Remember that we had a nonsquare estimated latent position matrix \(\hat X\). Like we learned before, we can get the estimated probability vector \(\hat p_i\) (the vector with its probability of connecting with node \(j\) in the \(j_{th}\) position) for a node by passing its estimated latent position (\(\hat x_i\)) through the estimated latent position matrix.

We can think of \(\hat X\) as a matrix the same way we thought of \(T\): it is the matrix corresponding to a linear transformation that eats a vector, and doesn’t necessarily preserve all the information about that vector when it outputs something (In this case, since \(\hat X\) brings lower-dimensional latent positions to higher-dimensional probability vectors, what’s happening is more of a restriction on which high-dimensional vectors you can access than a loss of information, but that’s not particularly important).

The pseudoinverse, \(\hat X^+\), is the best we can do to bring a higher-dimensional adjacency vector to a lower-dimensional latent position. Since the adjacency vector just approximates the estimated probability vector, we’ll use it as the estimated probability vector here. In practice, the best we can do generally turns out to be a pretty good guess, and so we can get a decent estimation of the latent position \(\hat x_i\):

Here, the \(\approx\) means “approximately”, in that it isn’t going to be exact (since it’s a pseudo-inverse), but in general, will suffice for our purposes.

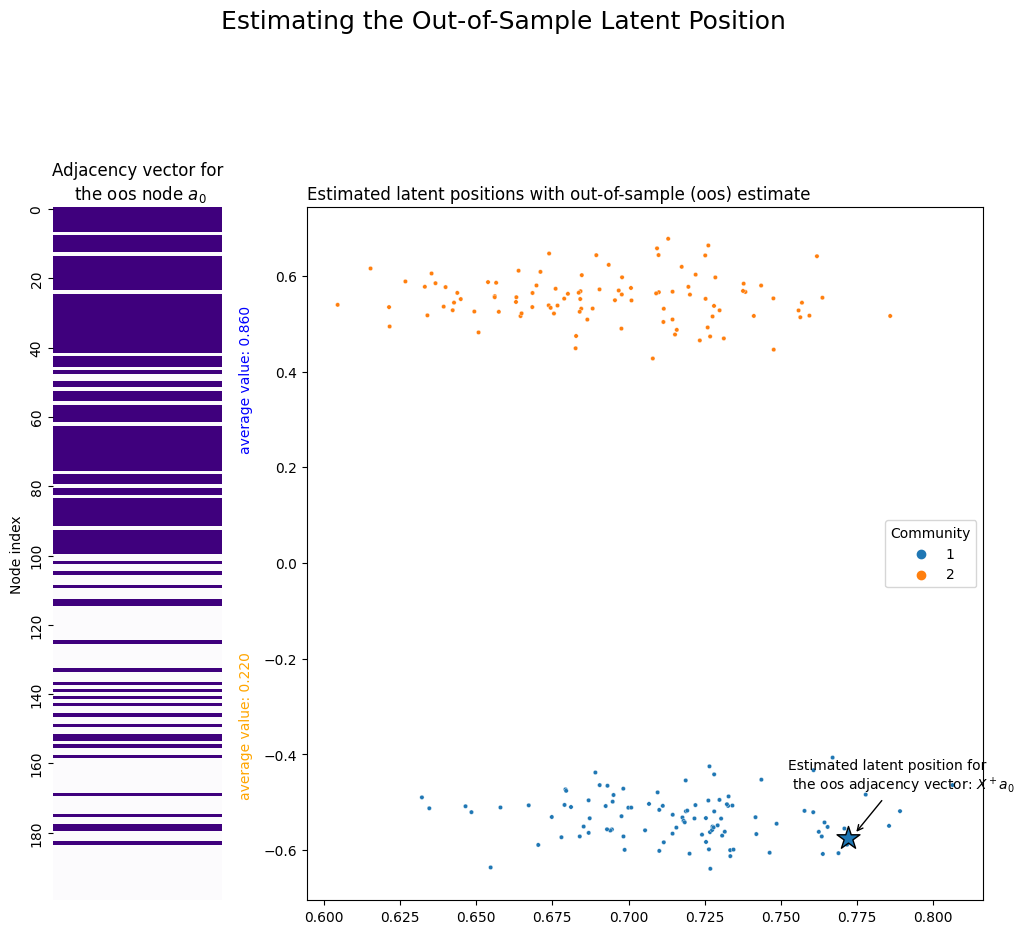

Let’s see it in action. Remember that we already grabbed our out-of-sample latent position and called it a_0. We use numpy’s pseudoinverse function to generate the pseudoinverse of the latent position matrix. Finally, we use it to get a_0’s estimated latent position, and call it x_0. You can see the location of this estimate in Euclidean space below: it falls squarely into the first community, which is where it should be.

from numpy.linalg import pinv

# Make the pseudoinverse of the latent position matrix

X_pinverse = pinv(X)

# Get its estimated latent position

x_0 = X_pinverse @ a_0

x_0

array([ 0.77218652, -0.5741383 ])

import matplotlib.gridspec as gridspec

np.random.seed(1)

# setup

fig = plt.figure(figsize=(12, 9))

gs = fig.add_gridspec(3, 4)

# adjacency vector

ax = fig.add_subplot(gs[:, 0])

sns.heatmap(a_0[:, np.newaxis], cbar=False, cmap=cmap, xticklabels=False, yticklabels=20, ax=ax)

ax.text(1.1, 70, s=f"average value: {a_0[:100].mean():.3f}", rotation=90, c="blue")

ax.text(1.1, 170, s=f"average value: {a_0[100:].mean():.3f}", rotation=90, c="orange")

ax.set_ylabel("Node index")

ax.set_title(r"Adjacency vector for" + "\n" + " the oos node $a_0$");

# latent position plot

ax = fig.add_subplot(gs[:, 1:])

plot = plot_latents(X, ax=ax, labels=np.array(labels) + 1, title="Estimated latent positions with out-of-sample (oos) estimate")

plot.scatter(x=x_0[0], y=x_0[1], marker='*', s=300, edgecolor="black")

plot.annotate(r"Estimated latent position for" + "\n" + " the oos adjacency vector: $X^+ a_0$", xy=(x_0[0]+.002, x_0[1]+.008),

xytext=(x_0[0]-.02, x_0[1]+.1), arrowprops={"arrowstyle": "->", "color": "k"})

sns.move_legend(ax, "center right")

fig.subplots_adjust(wspace=.5)

plt.suptitle("Estimating the Out-of-Sample Latent Position", fontsize=18, y=1.1);

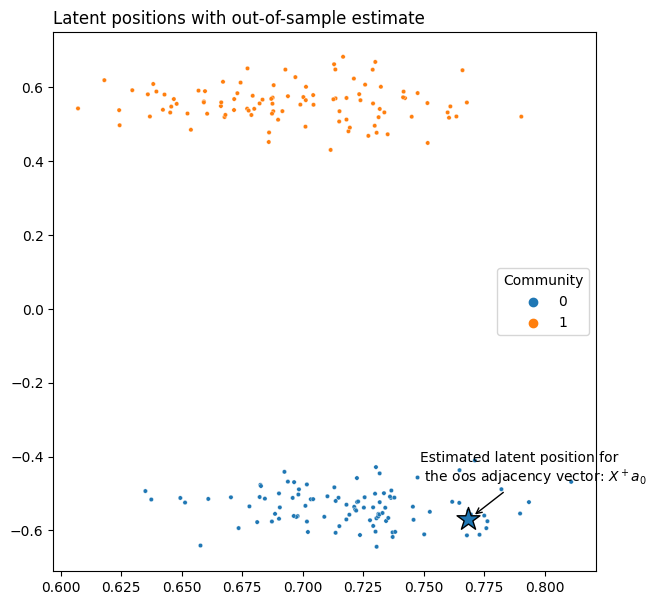

6.5.6. Out-of-sample embedding with graspologic#

Of course, you don’t have to do all of this manually. Below we generate an adjacency matrix \(A\) from an SBM, as well as the adjacency vector for an out-of-sample node \(a_0\). Once we fit an instance of the ASE class, the latent position for any new nodes can be predicted by simply calling ase.transform on the new adjacency vectors.

You can do the same thing with multiple out-of-sample nodes if you want by stacking their adjacency vectors on top of each other in a numpy array, then transforming the whole stack.

from graspologic.embed import AdjacencySpectralEmbed as ASE

# Make an ASE model

ase = ASE(n_components=2)

X = ase.fit_transform(A)

# Predict out-of-sample latent positions by transforming

x_0 = ase.transform(a_0)

fig, ax = plt.subplots(figsize=(7,7))

# latent position plot

plot = plot_latents(X, ax=ax, labels=labels, title="Latent positions with out-of-sample estimate")

plot.scatter(x=x_0[0], y=x_0[1], marker='*', s=300, edgecolor="black")

plot.annotate(r"Estimated latent position for" + "\n" + " the oos adjacency vector: $X^+ a_0$", xy=(x_0[0]+.002, x_0[1]+.008),

xytext=(x_0[0]-.02, x_0[1]+.1), arrowprops={"arrowstyle": "->", "color": "k"})

sns.move_legend(ax, "center right")

fig.subplots_adjust(wspace=.5)

6.5.7. References#

- 1

Keith Levin, Fred Roosta, Michael Mahoney, and Carey Priebe. Out-of-sample extension of graph adjacency spectral embedding. In International Conference on Machine Learning, pages 2975–2984. PMLR, July 2018. URL: https://proceedings.mlr.press/v80/levin18a.html.